TLDR: We stylize 3D Gaussian Splats from a text prompt by rendering views, editing them with a diffusion model, and then retraining the splat on the edited views. To keep the edits consistent across views, we use modified depth-guided cross-attention and a task-specific ControlNet. Separate strength controls for depth and RGB let you set how much the shape and the color change independently.

*denotes equal contribution.

Summary

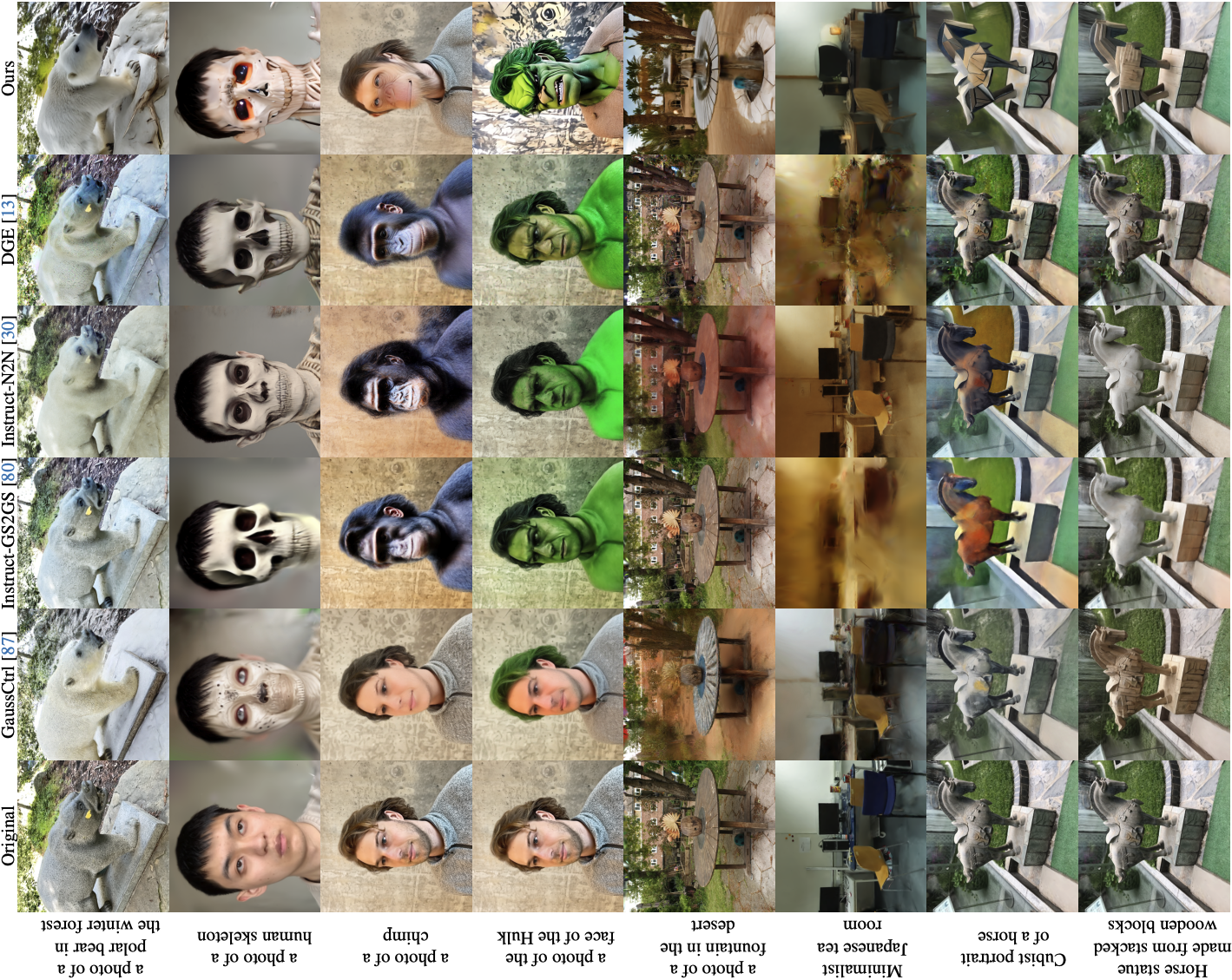

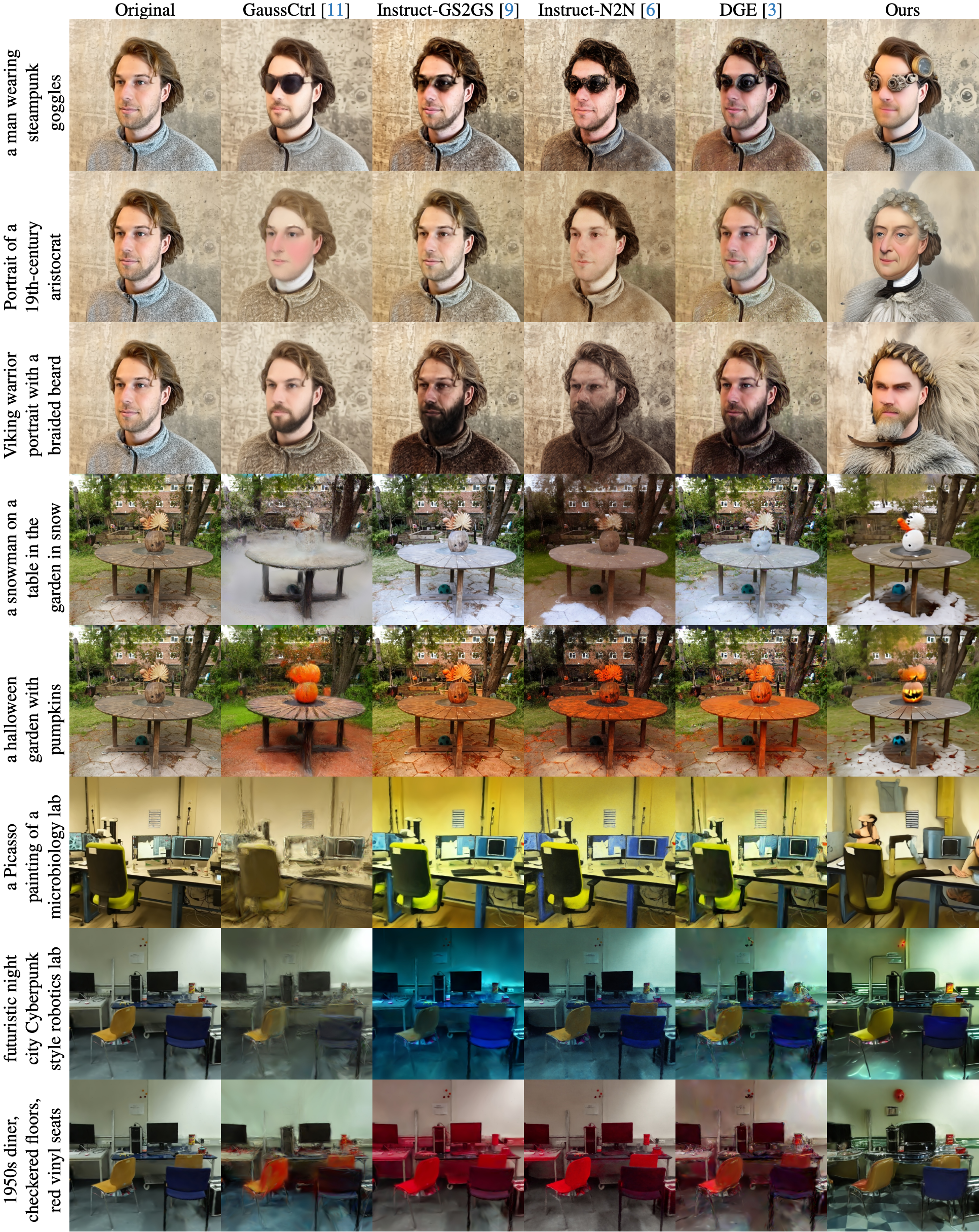

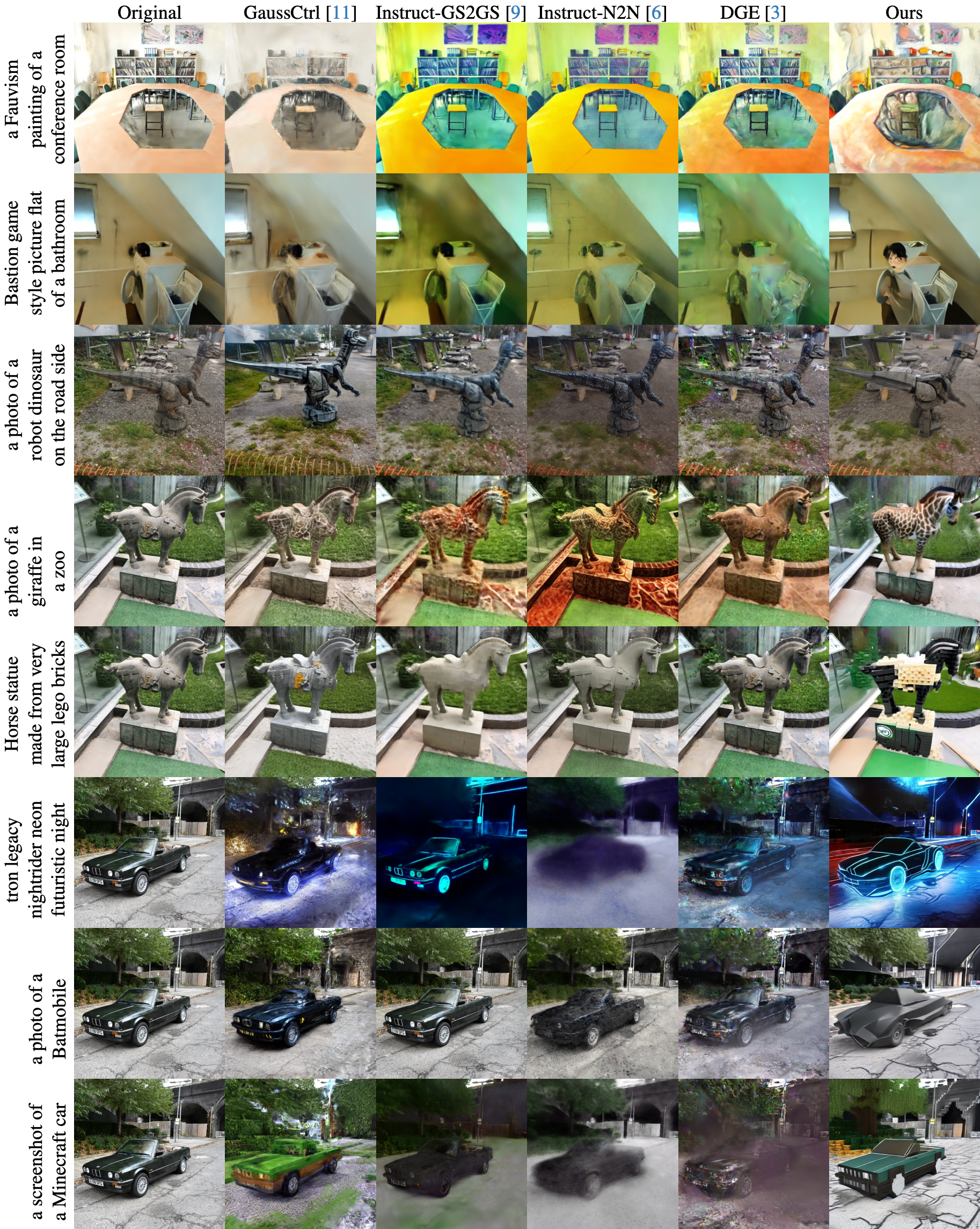

Exploring real-world spaces using novel-view synthesis is exciting, and reimagining those worlds in different styles adds another layer of fun. Stylized worlds can also be used for tasks with limited training data to expand a model's training distribution. However, current techniques often struggle to convincingly change geometry due to stability and consistency issues.

We propose a new autoregressive 3D Gaussian Splatting stylization method, introducing an RGBD diffusion model that allows independent control over appearance and shape stylization. To ensure consistency across frames, we use depth-guided cross-attention, feature injection, and a Warp ControlNet conditioned on composite frames.

Our method is validated through extensive qualitative results, quantitative experiments, and a user study.

Method

Overview

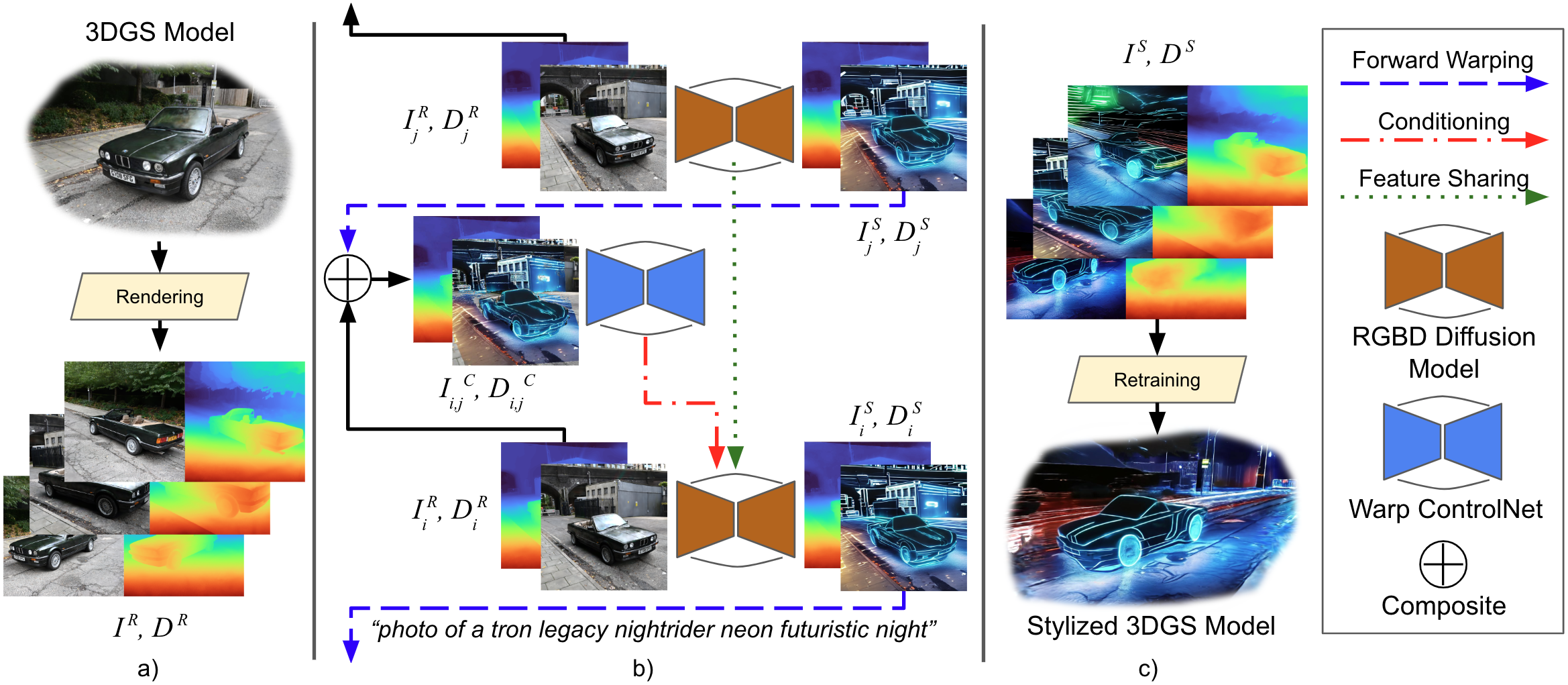

We use RGBD views as an intermediate representation for stylization: we render RGBD views of the input splat, stylize them, and then train an output splat.

RGBD diffusion model

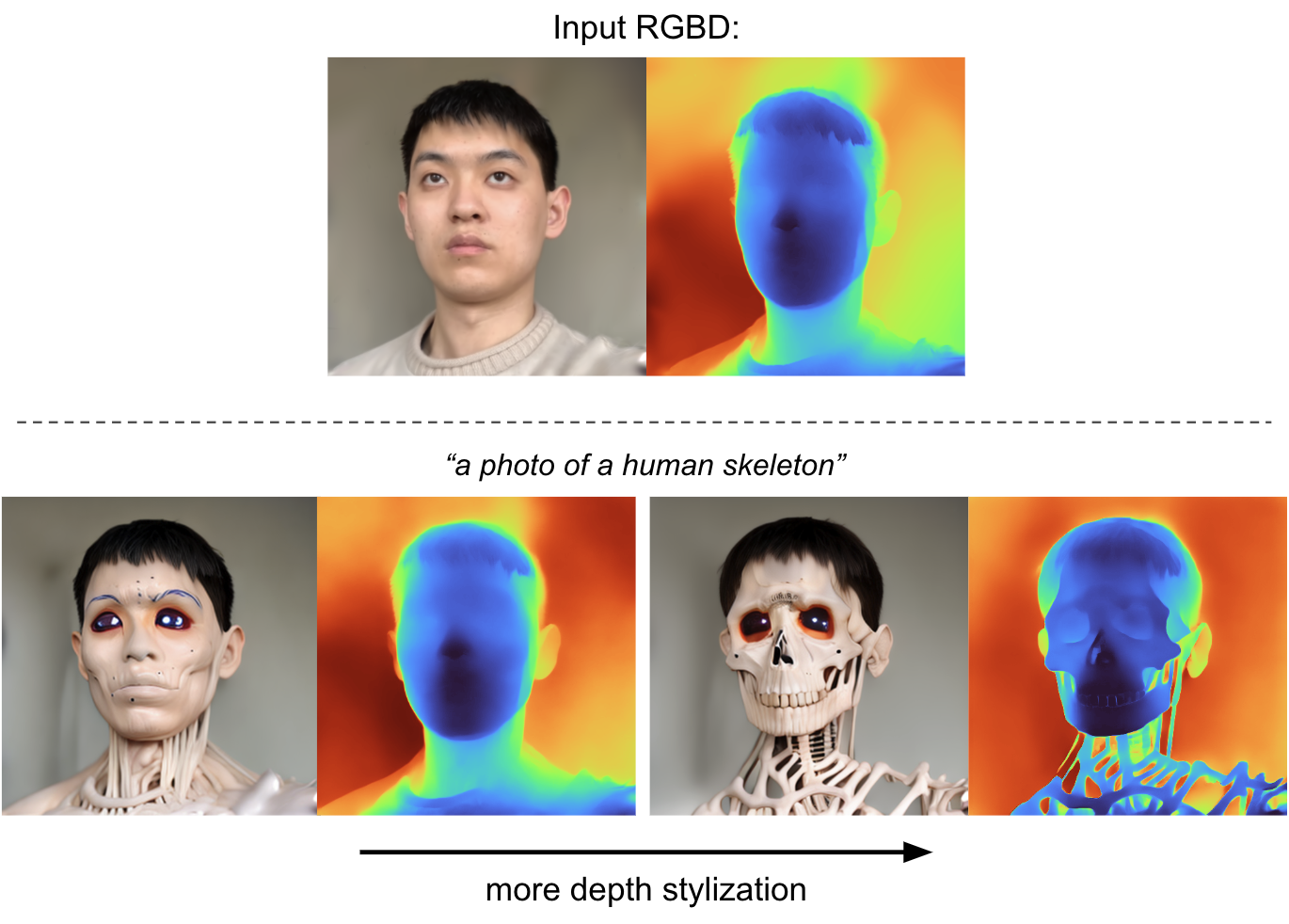

We add extra input and output channels to Stable Diffusion for depth, and train it to generate RGBD images. We add separate time parameters for depth and RGB, enabling independent control of how much to edit appearance and geometry.
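As a rough illustration of what separate time parameters make possible, the sketch below noises the RGB and depth channels of an RGBD image to different diffusion timesteps. It is not the authors' implementation: the linear beta schedule, the mapping from an edit strength to a starting timestep, the function names, and the use of raw pixel values instead of latents are all assumptions made for the example.

```python
import math
import random

NUM_STEPS = 1000  # assumed number of diffusion steps


def alpha_bar(t, num_steps=NUM_STEPS, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_i) for i < t, under an assumed linear beta schedule."""
    prod = 1.0
    for i in range(t):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
    return prod


def strength_to_timestep(strength, num_steps=NUM_STEPS):
    """Map an edit strength in [0, 1] to a starting timestep (assumed mapping; 0 means no edit)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    return round(strength * num_steps)


def noise_rgbd(pixels, rgb_strength, depth_strength, rng):
    """Noise a list of (r, g, b, d) pixels, using one timestep for RGB and another for depth."""
    a_rgb = alpha_bar(strength_to_timestep(rgb_strength))
    a_depth = alpha_bar(strength_to_timestep(depth_strength))
    noised = []
    for r, g, b, d in pixels:
        # Standard forward diffusion: x_t = sqrt(a) * x_0 + sqrt(1 - a) * eps
        rgb = [math.sqrt(a_rgb) * c + math.sqrt(1.0 - a_rgb) * rng.gauss(0.0, 1.0)
               for c in (r, g, b)]
        depth = math.sqrt(a_depth) * d + math.sqrt(1.0 - a_depth) * rng.gauss(0.0, 1.0)
        noised.append((rgb[0], rgb[1], rgb[2], depth))
    return noised
```

With `rgb_strength=0.7` and `depth_strength=0.0`, the depth channel is left untouched while the RGB channels are heavily noised, so a denoiser starting from this state could restyle the colors while staying close to the input geometry.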

Multiview-consistent Stylization

We render RGBD views of the input splat and stylize them autoregressively, with each view conditioned on the previously stylized views. We use a task-specific ControlNet and modified cross-attention to condition on previously stylized views. After stylizing, we train another splat on our stylized views.
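The control flow of the autoregressive loop can be sketched as follows. This shows only the ordering of operations, under the assumption that rendering and stylization are supplied as callables. `render_rgbd` and `stylize_view` are hypothetical placeholders, not functions from any released code, and the ControlNet and cross-attention conditioning would live inside `stylize_view`.

```python
from typing import Any, Callable, List, Sequence


def stylize_autoregressively(
    cameras: Sequence[Any],
    render_rgbd: Callable[[Any], Any],
    stylize_view: Callable[[Any, List[Any], str], Any],
    prompt: str,
) -> List[Any]:
    """Stylize views one at a time, giving each call the views stylized so far."""
    stylized: List[Any] = []
    for camera in cameras:
        source_view = render_rgbd(camera)  # RGBD render of the input splat
        context = list(stylized)           # snapshot of previously stylized views
        stylized.append(stylize_view(source_view, context, prompt))
    return stylized
```

The returned list would then serve as training data for the output splat. The first view receives an empty context, so its stylization is constrained only by the prompt and the source render.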

Interactive results

For a given stylization prompt, we can vary either the color stylization strength or the shape stylization strength of the stylized 3D Gaussian Splat. Try it yourself with renders of those splats!

More results

Resources

Coming soon!